Manage Kubernetes locally with RKE DIND

Rancher Kubernetes Engine (RKE) is an open-source Kubernetes installer written in Go. It is easy to use and highly customizable, it uses a containerized deployment for the Kubernetes components, and it runs on many platforms. It supports installation on bare-metal and virtualized servers, and it integrates with many cloud providers, including AWS, OpenStack, vSphere, and Azure. For more information about RKE, check out the official documentation.

Managing Kubernetes Locally

There are many options for running Kubernetes locally. The most popular is Minikube, which runs a single-node Kubernetes cluster inside a virtual machine on your local computer; it supports many Kubernetes features and runs on Linux, macOS, and Windows. Other options include Minishift, which installs a community version of OpenShift for local development, and MicroK8s, which provides a single-command installation of the latest Kubernetes version and is considered a very fast way to get Kubernetes running locally.

One known limitation of these options is that they only provide single-node Kubernetes installations, and they can lack flexibility when it comes to customizing and configuring Kubernetes.

Introducing RKE DIND

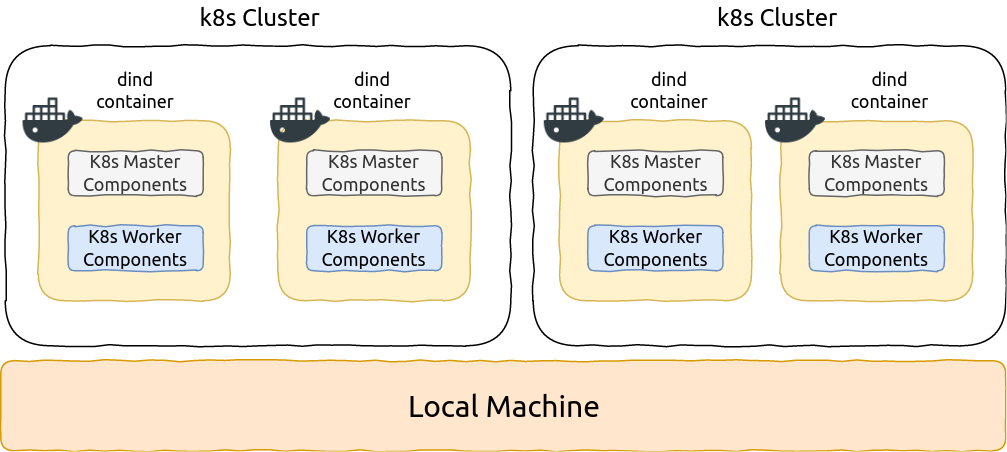

RKE's Docker-in-Docker (DIND) feature lets you run a multi-node Kubernetes installation locally, and the only requirement is having Docker installed on your local machine.

How It Works

RKE DIND works by treating DIND containers as Kubernetes nodes. Each DIND container can run master components, worker components, or both. In an RKE configuration, a node can be a controlplane node, which runs all the Kubernetes master components except etcd; an etcd node, which runs etcd; or a worker node, which runs the Kubernetes worker components. Any node can also combine two or all three of these roles.
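To make the role model concrete, here is a minimal Go sketch of how nodes with overlapping roles could be grouped. The `Role`, `Node`, and `groupByRole` names are hypothetical and are not RKE's own types; the point is only that a single DIND container can appear under several roles at once.

```go
package main

import (
	"fmt"
	"sort"
)

// Role is one of the three RKE node roles.
type Role string

const (
	RoleControlPlane Role = "controlplane"
	RoleEtcd         Role = "etcd"
	RoleWorker       Role = "worker"
)

// Node is a hypothetical, simplified stand-in for an RKE node entry:
// a DIND container name plus the roles it carries.
type Node struct {
	Address string
	Roles   []Role
}

// groupByRole returns, for each role, the sorted addresses of the nodes that
// carry it. A node with several roles is listed under each of them.
func groupByRole(nodes []Node) map[Role][]string {
	groups := make(map[Role][]string)
	for _, n := range nodes {
		for _, r := range n.Roles {
			groups[r] = append(groups[r], n.Address)
		}
	}
	for r := range groups {
		sort.Strings(groups[r]) // sorts the slice in place
	}
	return groups
}

func main() {
	// Mirrors the first cluster used in the example later in this post:
	// one all-in-one node plus two workers.
	nodes := []Node{
		{Address: "cluster1-node1", Roles: []Role{RoleControlPlane, RoleWorker, RoleEtcd}},
		{Address: "cluster1-node2", Roles: []Role{RoleWorker}},
		{Address: "cluster1-node3", Roles: []Role{RoleWorker}},
	}
	groups := groupByRole(nodes)
	for _, r := range []Role{RoleControlPlane, RoleEtcd, RoleWorker} {
		fmt.Printf("%-12s %v\n", r, groups[r])
	}
}
```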

Each DIND container runs the official docker:17.03-dind image. Currently this image is hardcoded in the RKE code, although it may become customizable in the future. Because the docker:17.03-dind image defaults to the vfs storage driver, which is very slow and inefficient, RKE runs each container with the host's storage driver.
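The storage-driver choice can be illustrated with a short Go sketch. It assumes the standard `docker info --format '{{.Driver}}'` query and the docker:dind image's convention that arguments placed after the image name are forwarded to the inner dockerd. `dindRunArgs` is a hypothetical helper: RKE's real container command differs (its containers start with `sh -c 'mount --ma...'`, as the docker ps output later in this post shows), so treat this as an illustration only. It prints the command instead of running it.

```go
package main

import (
	"fmt"
	"os/exec"
	"strings"
)

// dindImage is the image RKE DIND uses, according to this post.
const dindImage = "docker:17.03-dind"

// hostStorageDriver asks the local Docker daemon which storage driver it uses.
func hostStorageDriver() (string, error) {
	out, err := exec.Command("docker", "info", "--format", "{{.Driver}}").Output()
	if err != nil {
		return "", err
	}
	return strings.TrimSpace(string(out)), nil
}

// dindRunArgs builds a hypothetical `docker run` argument list for one DIND
// node. Arguments after the image name go to the inner dockerd, so
// --storage-driver overrides the slow vfs default. Not RKE's exact command.
func dindRunArgs(containerName, storageDriver string) []string {
	return []string{
		"run", "-d", "--privileged", // DIND needs a privileged container
		"--name", containerName,
		dindImage,
		"--storage-driver=" + storageDriver,
	}
}

func main() {
	driver, err := hostStorageDriver()
	if err != nil {
		fmt.Println("could not query docker info:", err)
		return
	}
	fmt.Println("docker", strings.Join(dindRunArgs("rke-dind-cluster1-node1", driver), " "))
}
```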

DIND Networking

Initially, RKE DIND was implemented with a separate Docker network for each Kubernetes cluster: the user could either create a new network or specify the name of an existing Docker network to run the cluster in.

However, this implementation proved complicated, because it required the user to keep track of each container's IP address, and RKE would fail with an error if it found any IP conflicts.
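The bookkeeping that this design pushed onto the user amounts to a duplicate-IP check. The sketch below is a hypothetical illustration written for this explanation, not RKE's validation code, and the node names and 172.18.0.x addresses are made up.

```go
package main

import (
	"fmt"
	"net"
)

// nodeIP pairs a node name with the IP address the user assigned to it.
type nodeIP struct {
	Node string
	IP   string
}

// checkIPConflicts returns an error for the first invalid or duplicated IP.
func checkIPConflicts(nodes []nodeIP) error {
	seen := make(map[string]string) // canonical IP -> node that claimed it first
	for _, n := range nodes {
		ip := net.ParseIP(n.IP)
		if ip == nil {
			return fmt.Errorf("node %s: invalid IP address %q", n.Node, n.IP)
		}
		key := ip.String()
		if owner, ok := seen[key]; ok {
			return fmt.Errorf("IP conflict: %s is assigned to both %s and %s", key, owner, n.Node)
		}
		seen[key] = n.Node
	}
	return nil
}

func main() {
	err := checkIPConflicts([]nodeIP{
		{"cluster1-node1", "172.18.0.2"},
		{"cluster1-node2", "172.18.0.3"},
		{"cluster1-node3", "172.18.0.2"},
	})
	// prints: IP conflict: 172.18.0.2 is assigned to both cluster1-node1 and cluster1-node3
	fmt.Println(err)
}
```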

The alternative was to make RKE DIND more flexible about container addresses: the user only has to add the node names to the configuration file, and RKE automatically runs the containers in the default Docker bridge network, fetches their addresses, and uses them for the installation.
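Fetching container addresses on the default bridge network can be sketched in Go by parsing `docker inspect` output, which reports each container's bridge address in `NetworkSettings.IPAddress`. This is a minimal sketch assuming the `rke-dind-<node>` container naming visible in the docker ps output later in this post; `parseAddresses` is a hypothetical helper, not the function RKE uses.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os/exec"
	"strings"
)

// inspectEntry models only the fields needed from `docker inspect` output.
type inspectEntry struct {
	Name            string `json:"Name"`
	NetworkSettings struct {
		IPAddress string `json:"IPAddress"` // address on the default bridge network
	} `json:"NetworkSettings"`
}

// parseAddresses maps each container name (without Docker's leading "/")
// to its default-bridge IP address.
func parseAddresses(inspectJSON []byte) (map[string]string, error) {
	var entries []inspectEntry
	if err := json.Unmarshal(inspectJSON, &entries); err != nil {
		return nil, err
	}
	addrs := make(map[string]string, len(entries))
	for _, e := range entries {
		addrs[strings.TrimPrefix(e.Name, "/")] = e.NetworkSettings.IPAddress
	}
	return addrs, nil
}

func main() {
	out, err := exec.Command("docker", "inspect",
		"rke-dind-cluster1-node1", "rke-dind-cluster1-node2", "rke-dind-cluster1-node3").Output()
	if err != nil {
		fmt.Println("docker inspect failed:", err)
		return
	}
	addrs, err := parseAddresses(out)
	if err != nil {
		fmt.Println("could not parse docker inspect output:", err)
		return
	}
	for name, ip := range addrs {
		fmt.Printf("%s -> %s\n", name, ip)
	}
}
```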

RKE DIND Docker Tunnel

RKE uses different tunnels to connect to the Kubernetes nodes. By default, the CLI uses an SSH tunnel to connect to the Docker daemon on each node; I described this feature in my last post.

If you are using RKE as a library, you can specify a custom tunnel dialer by passing a dialer factory to the RKE function:

```go
func ClusterUp(ctx context.Context, dialersOptions hosts.DialersOptions, flags cluster.ExternalFlags) (string, string, string, string, map[string]pki.CertificatePKI, error)
```

Here, dialersOptions holds two dialer factories and a Kubernetes transport wrapper:

```go
type DialersOptions struct {
	DockerDialerFactory    DialerFactory
	LocalConnDialerFactory DialerFactory
	K8sWrapTransport       k8s.WrapTransport
}
```

DockerDialerFactory is responsible for connecting to the Docker daemon on each node. A dialer factory has the following signature:

```go
type DialerFactory func(h *Host) (func(network, address string) (net.Conn, error), error)
```

The dialer factory for DIND containers connects over TCP to the Docker daemon on port 2375:

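```go
// Fragment of RKE's hosts package; Host and DialerFactory are defined elsewhere in it.
package hosts

import (
	"fmt"
	"net"
)

// DINDPort is the TCP port of the Docker daemon inside each DIND container.
const DINDPort = "2375"

// dindDialer dials the Docker daemon of a single DIND container.
type dindDialer struct {
	Address string
	Port    string
}

// DindConnFactory is a DialerFactory that connects over plain TCP to the
// Docker daemon of the DIND container at h.Address.
func DindConnFactory(h *Host) (func(network, address string) (net.Conn, error), error) {
	newDindDialer := &dindDialer{
		Address: h.Address,
		Port:    DINDPort,
	}
	return newDindDialer.Dial, nil
}

// Dial ignores the requested addr and always connects to the container's daemon.
func (d *dindDialer) Dial(network, addr string) (net.Conn, error) {
	target := net.JoinHostPort(d.Address, d.Port)
	conn, err := net.Dial(network, target)
	if err != nil {
		// Report the address actually dialed, not the ignored addr argument.
		return nil, fmt.Errorf("failed to dial dind address [%s]: %v", target, err)
	}
	return conn, nil
}
```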

Example

This post tests RKE DIND by running rke up on a local Ubuntu 16.04 machine with Docker Engine 17.03.2-ce. The example runs two clusters, each with three nodes.

I will be using RKE v0.2.0-rc3. The YAML configuration files for the two clusters are:

Cluster #1 (cluster1.yml):

```yaml
nodes:
  - address: cluster1-node1
    user: docker
    role: [controlplane,worker,etcd]
  - address: cluster1-node2
    user: docker
    role: [worker]
  - address: cluster1-node3
    user: ubuntu
    role: [worker]
```

Cluster #2 (cluster2.yml):

```yaml
nodes:
  - address: cluster2-node1
    user: docker
    role: [controlplane,worker,etcd]
  - address: cluster2-node2
    user: docker
    role: [controlplane,worker,etcd]
  - address: cluster2-node3
    user: ubuntu
    role: [controlplane,worker,etcd]
```
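If you need more than a couple of clusters, files like these can be generated. The sketch below is a hypothetical helper that builds the nodes section with plain string formatting rather than a YAML library; unlike the second cluster file above, it sets `user: docker` on every node.

```go
package main

import (
	"fmt"
	"strings"
)

// nodeSpec is a hypothetical type describing one DIND node entry.
type nodeSpec struct {
	Address string
	User    string
	Roles   []string
}

// renderClusterYAML writes a nodes section in the same shape as the
// cluster files above, using plain string building to stay dependency-free.
func renderClusterYAML(nodes []nodeSpec) string {
	var b strings.Builder
	b.WriteString("nodes:\n")
	for _, n := range nodes {
		fmt.Fprintf(&b, "  - address: %s\n", n.Address)
		fmt.Fprintf(&b, "    user: %s\n", n.User)
		fmt.Fprintf(&b, "    role: [%s]\n", strings.Join(n.Roles, ","))
	}
	return b.String()
}

// allInOneCluster builds n nodes that each carry all three roles.
func allInOneCluster(name string, n int) []nodeSpec {
	nodes := make([]nodeSpec, 0, n)
	for i := 1; i <= n; i++ {
		nodes = append(nodes, nodeSpec{
			Address: fmt.Sprintf("%s-node%d", name, i),
			User:    "docker",
			Roles:   []string{"controlplane", "worker", "etcd"},
		})
	}
	return nodes
}

func main() {
	fmt.Print(renderClusterYAML(allInOneCluster("cluster2", 3)))
}
```

Redirecting the output to a file (for example `go run gen.go > cluster3.yml` after changing the cluster name) gives you a file you can pass to `rke up --dind --config`.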

To bring up the first cluster, run the following command:

```
# rke up --dind --config cluster1.yml
WARN[0000] This is not an officially supported version (v0.2.0-rc3) of RKE. Please download the latest official release at https://github.com/rancher/rke/releases/latest
INFO[0000] [dind] Pulling image [docker:17.03-dind] on host [unix:///var/run/docker.sock]
INFO[0005] [dind] Successfully pulled image [docker:17.03-dind] on host [unix:///var/run/docker.sock]
INFO[0005] [dind] Successfully started [rke-dind-cluster1-node1] container on host [unix:///var/run/docker.sock]
INFO[0006] [dind] Successfully started [rke-dind-cluster1-node2] container on host [unix:///var/run/docker.sock]
INFO[0006] [dind] Successfully started [rke-dind-cluster1-node3] container on host [unix:///var/run/docker.sock]
...
INFO[0147] Finished building Kubernetes cluster successfully
```

And for the second cluster:

```
# ./rke_linux-amd64 up --dind --config cluster2.yml
WARN[0000] This is not an officially supported version (v0.2.0-rc3) of RKE. Please download the latest official release at https://github.com/rancher/rke/releases/latest
INFO[0000] [dind] Successfully started [rke-dind-cluster2-node1] container on host [unix:///var/run/docker.sock]
INFO[0000] [dind] Successfully started [rke-dind-cluster2-node2] container on host [unix:///var/run/docker.sock]
INFO[0000] [dind] Successfully started [rke-dind-cluster2-node3] container on host [unix:///var/run/docker.sock]
...
INFO[0177] Finished building Kubernetes cluster successfully
```

As you can see, the first cluster took about 2 minutes and 27 seconds to provision (147 seconds), and the second took about 3 minutes (177 seconds).

You can see the docker-in-docker containers on the local machine:

```
# docker ps
CONTAINER ID        IMAGE               COMMAND                  CREATED             STATUS              PORTS               NAMES
4237db7f023b        docker:17.03-dind   "sh -c 'mount --ma..."   6 minutes ago       Up 6 minutes        2375/tcp            rke-dind-cluster2-node3
dd29ffe9016d        docker:17.03-dind   "sh -c 'mount --ma..."   6 minutes ago       Up 6 minutes        2375/tcp            rke-dind-cluster2-node2
f4138bb0cc2e        docker:17.03-dind   "sh -c 'mount --ma..."   6 minutes ago       Up 6 minutes        2375/tcp            rke-dind-cluster2-node1
89a1efc02bb8        docker:17.03-dind   "sh -c 'mount --ma..."   13 minutes ago      Up 13 minutes       2375/tcp            rke-dind-cluster1-node3
2d11ecb3ce08        docker:17.03-dind   "sh -c 'mount --ma..."   13 minutes ago      Up 13 minutes       2375/tcp            rke-dind-cluster1-node2
df1d8cdd36f6        docker:17.03-dind   "sh -c 'mount --ma..."   13 minutes ago      Up 13 minutes       2375/tcp            rke-dind-cluster1-node1
```
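To list only the DIND nodes and group them by cluster, you can filter docker ps by name. This sketch assumes the `rke-dind-<cluster>-node<N>` naming seen above; `groupByCluster` is a hypothetical helper, while `--filter name=...` and `--format '{{.Names}}'` are standard docker ps options.

```go
package main

import (
	"fmt"
	"os/exec"
	"sort"
	"strings"
)

const dindPrefix = "rke-dind-"

// groupByCluster turns container names like "rke-dind-cluster1-node2" into
// cluster -> sorted node names, assuming the <cluster>-node<N> convention.
func groupByCluster(names []string) map[string][]string {
	clusters := make(map[string][]string)
	for _, name := range names {
		node := strings.TrimPrefix(name, dindPrefix)
		if node == name {
			continue // not a DIND container
		}
		i := strings.LastIndex(node, "-")
		if i < 0 {
			continue // does not follow the <cluster>-node<N> convention
		}
		cluster := node[:i]
		clusters[cluster] = append(clusters[cluster], node)
	}
	for c := range clusters {
		sort.Strings(clusters[c])
	}
	return clusters
}

func main() {
	out, err := exec.Command("docker", "ps", "--filter", "name="+dindPrefix, "--format", "{{.Names}}").Output()
	if err != nil {
		fmt.Println("docker ps failed:", err)
		return
	}
	groups := groupByCluster(strings.Fields(string(out)))
	clusters := make([]string, 0, len(groups))
	for c := range groups {
		clusters = append(clusters, c)
	}
	sort.Strings(clusters) // stable output order
	for _, c := range clusters {
		fmt.Println(c, groups[c])
	}
}
```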

Accessing The DIND Clusters

After finishing the installation, RKE places a kube_config_* file for each cluster. To use one, point kubectl at it with the KUBECONFIG environment variable or the --kubeconfig option:

```
# KUBECONFIG=kube_config_cluster1.yml kubectl get nodes
NAME             STATUS   ROLES                      AGE   VERSION
cluster1-node1   Ready    controlplane,etcd,worker   16m   v1.12.4
cluster1-node2   Ready    worker                     16m   v1.12.4
cluster1-node3   Ready    worker                     16m   v1.12.4

# KUBECONFIG=kube_config_cluster2.yml kubectl get nodes
NAME             STATUS   ROLES                      AGE     VERSION
cluster2-node1   Ready    controlplane,etcd,worker   9m14s   v1.12.4
cluster2-node2   Ready    controlplane,etcd,worker   9m14s   v1.12.4
cluster2-node3   Ready    controlplane,etcd,worker   9m15s   v1.12.4
```
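The same per-cluster switch can be scripted. This Go sketch, built around a hypothetical `kubectlFor` helper, sets KUBECONFIG only in the child process environment, which is equivalent to the shell prefix form used above. It assumes kubectl is on your PATH and the kube_config_*.yml files are in the current directory.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
)

// kubectlFor returns a kubectl command bound to one cluster's kubeconfig.
// If the parent environment already has KUBECONFIG, os/exec uses the last
// duplicate entry, so the appended value wins.
func kubectlFor(kubeconfig string, args ...string) *exec.Cmd {
	cmd := exec.Command("kubectl", args...)
	cmd.Env = append(os.Environ(), "KUBECONFIG="+kubeconfig)
	cmd.Stdout = os.Stdout
	cmd.Stderr = os.Stderr
	return cmd
}

func main() {
	for _, cfg := range []string{"kube_config_cluster1.yml", "kube_config_cluster2.yml"} {
		fmt.Println("==>", cfg)
		if err := kubectlFor(cfg, "get", "nodes").Run(); err != nil {
			fmt.Println("kubectl failed:", err)
		}
	}
}
```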

Limitations

- Currently, the RKE DIND feature only works on Linux; installation on macOS is not yet supported.
- DIND containers are not customizable, including their:
  - Docker version
  - DIND subnet
- It doesn't work with systemd-resolved: each DIND container mounts /etc/resolv.conf from the host, but with systemd-resolved enabled the nameserver is set to a loopback address (127.0.0.53 for the systemd-resolved stub resolver). Inside the container that address points at the container itself, where no resolver is listening, so DNS lookups fail.
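Before running rke up --dind, you could check whether your host's resolver configuration will run into this last limitation. The sketch below is a hypothetical pre-flight check, not part of RKE: it reports any nameserver entry in /etc/resolv.conf that points at a loopback address.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"os"
	"strings"
)

// loopbackNameservers returns every nameserver entry in resolv.conf content
// that points at a loopback address (any 127.0.0.0/8 address, or ::1).
// Such a resolver is unreachable from inside a DIND container.
func loopbackNameservers(resolvConf string) []string {
	var found []string
	sc := bufio.NewScanner(strings.NewReader(resolvConf))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) >= 2 && fields[0] == "nameserver" {
			if ip := net.ParseIP(fields[1]); ip != nil && ip.IsLoopback() {
				found = append(found, fields[1])
			}
		}
	}
	return found
}

func main() {
	data, err := os.ReadFile("/etc/resolv.conf")
	if err != nil {
		fmt.Println("cannot read /etc/resolv.conf:", err)
		return
	}
	if ns := loopbackNameservers(string(data)); len(ns) > 0 {
		fmt.Println("warning: loopback nameservers will not work inside DIND containers:", ns)
	} else {
		fmt.Println("no loopback nameservers found")
	}
}
```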